The sprint is a concept from the Scrum agile framework. It designates a project cycle during which a set of tasks is carried out in sequence to reach a new stage in the development of a product.

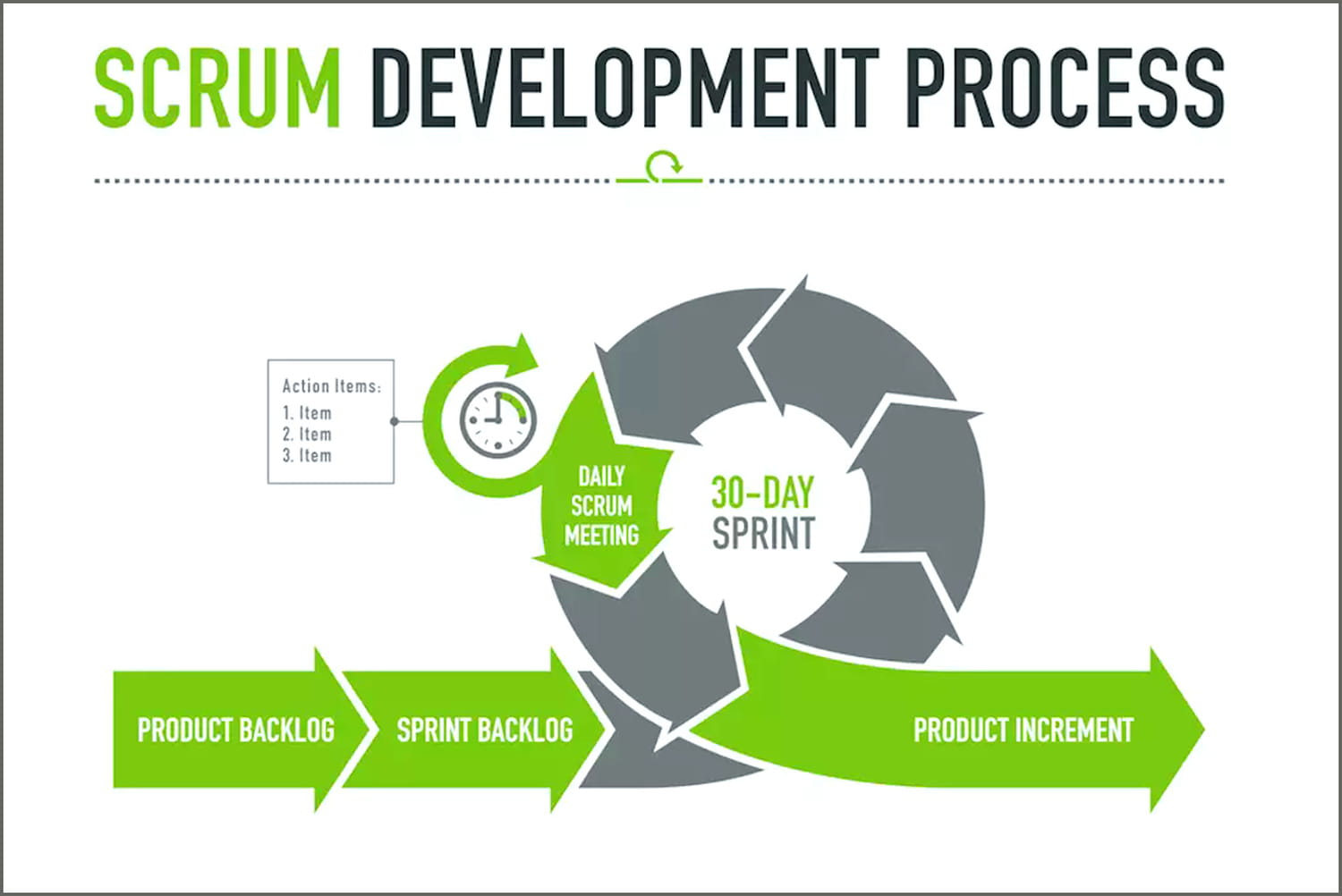

An agile sprint is one phase in a sequence of phases in the development of a product. Sprints are short iterations that break down an often complex development process, making it simpler and easier to adapt and improve based on the results of intermediate evaluations (see the diagram above).

In agile methods, the goal is to start small and then improve the first version of a product through small iterations. This limits risk. It also avoids the "tunnel effect" of V-model projects, which are split into successive phases of analysis, specification, design, coding and testing. Such projects have a single delivery at the end of the process, with no intermediate check-ins with business users. As a result, the finished product may no longer meet needs on the ground, which may have changed in the meantime.

Working in successive development sprints has several advantages. First, it gives better control over the added value and quality of the final product or service. The iterations let the team course-correct at any time based on customer feedback. And because a first version of the product ships quickly, the return on investment often comes sooner too.

Ultimately, working in sprints helps increase customer satisfaction, whether the customers are internal users of the product or end customers. They feel that their input is taken into account throughout the development process.

As a general rule, a sprint lasts between one and four weeks. The length of each cycle depends on the tasks defined as priorities and on the time the project team judges necessary to complete them. Each sprint pursues a single, specific product development goal.

The notion of a sprint is familiar to development teams because it is the cornerstone of Scrum, the most widely used agile framework today; hence the term “Scrum sprint”. In Scrum, the length of a sprint is set in consultation with the members of the project team.

But Scrum is not the only agile method built on short development iterations. Other agile methods such as Extreme Programming (XP), Feature-Driven Development (FDD) and Crystal Clear also work in rapid development cycles equivalent to sprints.

The four steps of the agile sprint

1. Sprint planning

Sprint planning is the first step of a sprint. It is a fairly formalized event during which the development cycle is organized and the goals to be achieved are clearly stated. All team members must know the information about the development process so that they can communicate effectively.

2. The daily meeting

The daily scrum meeting is a check-in that takes place during the sprint and brings together the members of the development team. Its purpose is to adjust the plan for reaching the sprint goal based on the work completed so far.

Limited to 15 minutes, the daily scrum meeting is also an opportunity to raise difficulties. If a discussion starts on a blocking issue, it is recommended to schedule a separate meeting dedicated to that issue, limited to the people concerned.

3. The sprint review

At the end of each sprint, a sprint review is held so that the development team can present the increments added to the product under development.

The meeting is an opportunity to review the product's progress. Is it still aligned with business needs? Does it need any adjustments? The scope of the next sprint is also discussed during the sprint review.

4. The sprint retrospective

The sprint retrospective takes place after each sprint review. Business users are generally not invited. It gives the development team a space to share lessons learned during the sprint and to work on ways to improve processes and tools. It is also an opportunity to review relationships between team members and any problems they may have encountered.

In agile terms, the sprint retrospective embodies the principle of continuous improvement: the goal is for each sprint to be more efficient than the one before it. It is an empirical approach, i.e. one based on experience and learning by doing.

The sprint backlog is one of the artifacts defined by the Scrum framework. It is widely used, even though some teams find it rather heavy to set up. It gathers all the user stories (i.e. functional requests from business users) that the development team has committed to completing during a sprint. The progress of these requests is shown on a kanban board (or scrum board), so that every team member shares the same view of the current sprint.

There are several types of user stories: feature development, technical environment work, bug fixes, and so on. They are the smallest units of work. They are always written from the end user's point of view, which ties each of the developer's units of work to the value it will ultimately deliver.
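To make this structure more concrete, a sprint backlog and its kanban board can be modeled as a small data structure. The Python sketch below is illustrative only: the class names (`SprintBacklog`, `UserStory`, `StoryType`), the three board columns and the example stories are hypothetical assumptions, not part of Scrum or of any particular tool.

```python
from dataclasses import dataclass
from enum import Enum


class StoryType(Enum):
    # Hypothetical story categories, mirroring the types listed above.
    FEATURE = "feature"
    TECHNICAL = "technical environment"
    BUG_FIX = "bug fix"


# Hypothetical kanban columns in workflow order; real teams choose their own.
COLUMNS = ("To do", "In progress", "Done")


@dataclass
class UserStory:
    # Written from the end user's point of view ("As a ..., I want ...").
    description: str
    kind: StoryType
    status: str = "To do"


class SprintBacklog:
    """Hypothetical in-memory sprint backlog displayed as a kanban board."""

    def __init__(self, sprint_goal: str) -> None:
        self.sprint_goal = sprint_goal
        self.stories: list[UserStory] = []

    def commit(self, story: UserStory) -> None:
        # Add a story the team commits to completing during this sprint.
        self.stories.append(story)

    def move(self, story: UserStory, column: str) -> None:
        # Move a story to another column; reject unknown columns.
        if column not in COLUMNS:
            raise ValueError(f"unknown column: {column}")
        story.status = column

    def board(self) -> dict[str, list[str]]:
        # Shared view of the sprint: story descriptions grouped by column.
        return {
            col: [s.description for s in self.stories if s.status == col]
            for col in COLUMNS
        }


# Usage example with made-up stories.
backlog = SprintBacklog("Let buyers pay by card")
pay = UserStory("As a buyer, I want to pay by card", StoryType.FEATURE)
fix = UserStory("As a buyer, I want the cart total to include taxes", StoryType.BUG_FIX)
backlog.commit(pay)
backlog.commit(fix)
backlog.move(pay, "In progress")
print(backlog.board())
```

Grouping by a single `status` field keeps each story in exactly one column, which matches the idea of a board where every item sits in one place at a time.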

Whereas the agile sprint refers to product development cycles, the design sprint refers to the upstream creative process. Inspired by design thinking, its purpose is for a team to examine as many ideas as possible. A design sprint generally lasts five days and makes it possible to validate a product or service concept quickly. The goal is to produce one or two prototypes, which are then tested with real users on the last day.

Drawing on collective intelligence, the design sprint aims to respond quickly to a business problem by defining a clear, validated product direction, along with a development roadmap. Ultimately, the aim is to shorten time to market while reducing commercial risk. The core principles of the design sprint are a multidisciplinary team, unity of time and place, rapid prototyping, and testing in real conditions.